This year we embarked on a new adventure with Pega. Pega has wanted to phase out the Amazon Web Services (AWS) Transit Gateway (TGW) for quite some time. They want all customers to switch to AWS PrivateLink (PL). The AWS TGW is used to connect the Pega SAAS environment to internal environments, including VPN traffic and telephony integration (CTI). Most Pega customers have a lot of back-end integrations.

Rationale

Why, we wondered, would Pega want to phase out the TGW? The technology is perfect. You simply link up the Pega SAAS network with other AWS environments and possibly legacy networking using VPN. And then why replace it with a point-to-point solution like AWS PL?

After a while it dawned on us. When linking networks you expose a lot of assets, which can be a security risk. Or actually, it is, of course. From our Pega servers we could access virtually any other server within the organisation. Suddenly setting up a PrivateLink was a lot more attractive.

Since I am not an AWS network expert, I onboarded Jaap Poot of Nubibus consulting, one of the top AWS network engineers available, and we set off on our journey to create a new reference architecture.

Starting point

As a starting point we considered a setup of a Pega SAAS solution (as hosted by Pega for many customers) accessible from an internal company network only. With tens if not hundreds of backend APIs to call. With a CTI (telephony) link, other platforms calling a myriad of APIs hosted on Pega using custom service packages, browser traffic for internal staff accessing the interaction and other portals, et cetera. So a complex setup with links that all need to be migrated from the TGW to PL…

Design by doing, experiment #1

A good brainstorm is the best way to kick off any project. The first question is of course how PL works. So we experimented a little using two separate AWS environments.

It turned out that we could enable a communication channel from environment Pega SAAS to environment Customer and vice versa using two AWS PL combinations. First we set up a PL endpoint service and a PL endpoint in both accounts. Those should obviously point to each other. AWS links them up automatically. And the link is achieved. But how to call this link from Pega or vice versa? On the PrivateLink endpoint a DNS name is generated by AWS which is a bit weird, but usable. For example: vpce-0d89041f-g5m.vpce-svc-0959.eu-central-1.vpce.amazonaws.com. Well, that can be called from any system, right?

On the PL service you have the option to assign a private DNS name to make it a bit easier. Any DNS name can be used as long as you own the hosted zone. You will need to place DNS verification records in your hosted zone to prove domain name ownership, which is normal. We chose to use something like gateway.com. And that worked too! Brilliant. First test complete. We understood the technology. Now we needed a design.

Requirements

While thinking about the design, the list of requirements became clear. And it was not one for the faint-hearted.

- The Pega system should not be reachable from the internet directly; all incoming traffic to Pega should be behind a firewall. This covers DXAPI traffic, chat servers, et cetera. Outgoing traffic from Pega to other systems is allowed.

- API traffic from Pega to any system we want within the customer network should be supported using any HTTP-based protocol (SOAP, REST, …).

- It should be possible to easily hook up new systems or change our back end systems to link to other environments. Most companies use separate environments for dev, test, staging, pre-prod and production. And they should all have the option of linking to different back end systems.

- All traffic should be encrypted by default; this is a best practice which we like to follow.

- Setting up a PrivateLink for every system is rather cumbersome, so we would prefer it if we could use just one to lower the maintenance effort.

- But … some customers have legacy back ends from the days of the Flintstones, so we need to support HTTPS termination and then switch to HTTP.

- And then there is CTI. This mostly means TCP level communication with dynamic ports as we found out during our experimentation.

- When on location or working from home most people use trusted networks or VPN to connect to those trusted networks. Browser traffic to the AWS SAAS environment should be supported.

- Links need to work all the time, so failovers must be integrated in the solution.

- Any security patches or changes to configurations should be installed and activated overnight.

Experiment #2

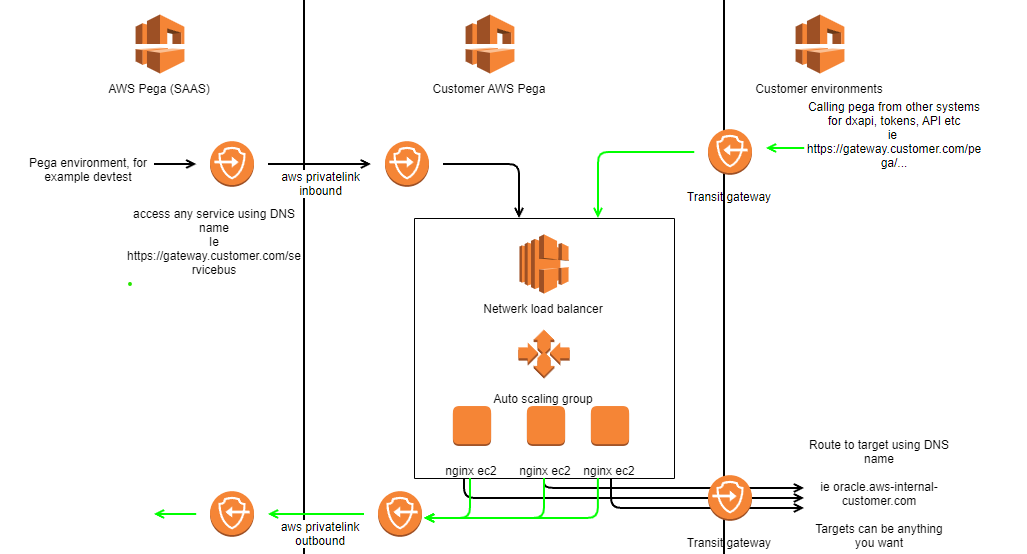

Based on the list above and previous experience with AWS and building proxies, we built up an architecture on AWS using nginx to route traffic from and to the Pega SAAS environment. It sort of looks like this.

I will explain the picture and setup a bit more.

Traffic from Pega to customer network

The principle is based on the AWS PL using a private DNS name which Pega can call. Nginx will be set up as a reverse proxy, a standard nginx feature that also supports SSL termination. Using sub folders (URL paths) on the DNS name, all kinds of backend systems can be supported. For example: gateway.customer.com/oracle will be routed to oracle.backend.customer.com, gateway.customer.com/sqlserver will be routed to sqlserver.azure.com, and gateway.customer.com/customerinformation will be routed to customerinfo.some-aws-environment.customer.com.
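To make the sub folder idea a bit more concrete, here is a minimal sketch in Python that renders an nginx server block from a route table. The route table comes from the examples above; the `https://` scheme on the backends, the certificate paths and the function names are assumptions for illustration, not our actual configuration.

```python
from typing import Mapping

# Hypothetical route table built from the examples in the text; the
# https:// scheme on each backend is an assumption.
ROUTES = {
    "oracle": "https://oracle.backend.customer.com",
    "sqlserver": "https://sqlserver.azure.com",
    "customerinformation": "https://customerinfo.some-aws-environment.customer.com",
}


def render_location(prefix: str, upstream: str) -> str:
    """Render one nginx location block that forwards /<prefix>/ to upstream."""
    # The trailing slash on proxy_pass makes nginx replace the matched
    # /<prefix>/ part of the request path with "/".
    return (
        f"    location /{prefix}/ {{\n"
        f"        proxy_pass {upstream}/;\n"
        f"    }}\n"
    )


def render_server(server_name: str, cert: str, key: str,
                  routes: Mapping[str, str]) -> str:
    """Render a TLS-terminating server block with one location per backend."""
    locations = "\n".join(
        render_location(prefix, upstream)
        for prefix, upstream in sorted(routes.items())
    )
    return (
        "server {\n"
        "    listen 443 ssl;\n"
        f"    server_name {server_name};\n"
        f"    ssl_certificate {cert};\n"
        f"    ssl_certificate_key {key};\n"
        "\n"
        f"{locations}"
        "}\n"
    )


if __name__ == "__main__":
    # Hypothetical certificate paths on the proxy instance.
    print(render_server("gateway.customer.com",
                        "/etc/nginx/tls/gateway.crt",
                        "/etc/nginx/tls/gateway.key",
                        ROUTES))
```

Adding a backend then comes down to adding one entry to the route table and regenerating the file.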

Using standard AWS features like load balancers, auto scaling groups and EC2 instances, high availability is built in.

The nginx configuration for all EC2 instances is the same. It would be logical to store the nginx configuration on an EFS volume and make sure that the EC2 instances read the config from this file share.

All these components will be hosted on AWS in a private network segment so access from the internet is never allowed.

Basically this setup fulfills these requirements:

- The Pega system should not be reachable from the internet directly – achieved by using private networks only

- API traffic from Pega to any system we want within the customer network should be supported using any HTTP protocol – achieved using nginx.

- Easily hook up new systems or change our back end systems to link to other environments.

- Have the option for different linking to back end systems. – Every AWS PL link will have its own AWS environment. So traffic can be completely separated.

- All traffic should be encrypted by default – on nginx we install a certificate. There is a catch, read on to learn more.

- Setting up a PrivateLink for every system is rather cumbersome, so we would prefer it if we could use just one to lower the maintenance effort. – in this setup we only need one

- Legacy back ends using HTTP – nginx can handle this, this is standard out of the box

- And then there is CTI. This mostly means TCP level communication with dynamic ports. – let's try to route this over nginx

- Links need to work all the time, so failovers must be integrated in the solution. – Load balancers and auto scaling will ensure this

- Any security patches or changes to configurations should be installed and activated overnight – add a scheduled task on the EC2 instances to restart every night

The other requirements, especially CTI, we were not sure about. So we decided to just give it a go with this setup.

Certificates

Certificates are a bit difficult on AWS when used in combination with nginx. Nginx expects to be able to use the private key of a certificate, and AWS will never give you the private key of any of their certificates. This means you will have to set up a DNS name not linked to AWS to use for your gateway. Not a hard thing to do, but something that needs to be arranged.

Deploy on AWS

For deployments on AWS I have chosen the Python CDK. Since a lot of things need to be arranged in the environments, the ability to use a loop, for example for opening up ports on the load balancer target groups, is very helpful in keeping things manageable.
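The loop idea can be sketched without the CDK itself. In the snippet below, a plain dataclass stands in for the listener and target group that a CDK stack would create per port; the class name, the port numbers and the naming scheme are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class ListenerSpec:
    """Stand-in for a load balancer listener plus its target group."""
    name: str
    port: int
    protocol: str = "TCP"


# Hypothetical ports: 443 for HTTPS traffic via nginx, plus a small
# range for CTI traffic.
HTTPS_PORT = 443
CTI_PORTS = range(5000, 5004)


def build_listener_specs(ports: Iterable[int]) -> List[ListenerSpec]:
    """Create one listener spec per unique port, in ascending port order."""
    specs = []
    for port in sorted(set(ports)):
        # In a CDK stack, this is where the listener and target group
        # for the port would be created.
        specs.append(ListenerSpec(name=f"gateway-{port}", port=port))
    return specs


SPECS = build_listener_specs([HTTPS_PORT, *CTI_PORTS])
```

Opening an extra port is then a one-line change to the port list instead of another copy of the listener definition.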

And then we failed …

After investing a lot of time in setting up a proof of concept we were successful in a number of things.

- Links from Pega to any back end system could be made for API calls

- Setting up a new link is a breeze, just edit the nginx.conf file and restart the nginx process or EC2 machines

- Calling from the customer network to Pega works perfectly for APIs.

And we failed on these points …

- CTI links did not work

- Browser traffic from the customer network to Pega did not work

Learnings from this setup

CTI. It turned out that CTI traffic in our case is TCP level traffic and uses a lot of dynamic ports. So we have to think of something else for CTI …

Forwarding Pega. In this case a sub folder will not work, since Pega expects to own the URL. So a separate DNS name should be used. For example: devtest.gateway.customer.com. This is then forwarded to Pega using a separate server block in nginx. This means that we need to upgrade to a multi-domain wildcard certificate. Fortunately we already owned the DNS and private key so this was an easy fix.

The DNS name to access Pega for API traffic, DXAPI or browser traffic needs to be set up in Route 53 and then hooked up to the load balancer. Nginx will then forward this traffic to the Pega AWS SAAS environment using the DNS name on the PrivateLink endpoint.
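The difference between the two routing styles can be modelled in a few lines of Python. This is only a sketch of the decision nginx makes, not nginx itself: a dedicated hostname passes the path to Pega untouched, while a sub folder on the shared gateway name has its prefix stripped. The endpoint name for Pega and the sample paths in the tests are placeholders.

```python
from typing import Optional, Tuple

# Hypothetical names. PEGA_HOSTS maps a dedicated gateway hostname to the
# DNS name of the PrivateLink endpoint for a Pega environment (placeholder).
PEGA_HOSTS = {
    "devtest.gateway.customer.com": "pega-devtest-endpoint.example.internal",
}
# Sub folder routes on the shared gateway hostname.
PATH_ROUTES = {
    "oracle": "oracle.backend.customer.com",
}
GATEWAY_HOST = "gateway.customer.com"


def resolve(host: str, path: str) -> Optional[Tuple[str, str]]:
    """Return (upstream host, upstream path), or None when nothing matches."""
    if host in PEGA_HOSTS:
        # Host-based routing: Pega owns the whole URL, so the path is
        # forwarded exactly as it arrived.
        return PEGA_HOSTS[host], path
    if host == GATEWAY_HOST:
        # Path-based routing: the first path segment selects the backend
        # and is removed before forwarding.
        prefix, _, rest = path.lstrip("/").partition("/")
        if prefix in PATH_ROUTES:
            return PATH_ROUTES[prefix], "/" + rest
    return None
```

Because the sub folder variant rewrites the path, any absolute links that Pega generates would point outside the sub folder, which is one way to read "Pega expects to own the URL".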

Final reference architecture

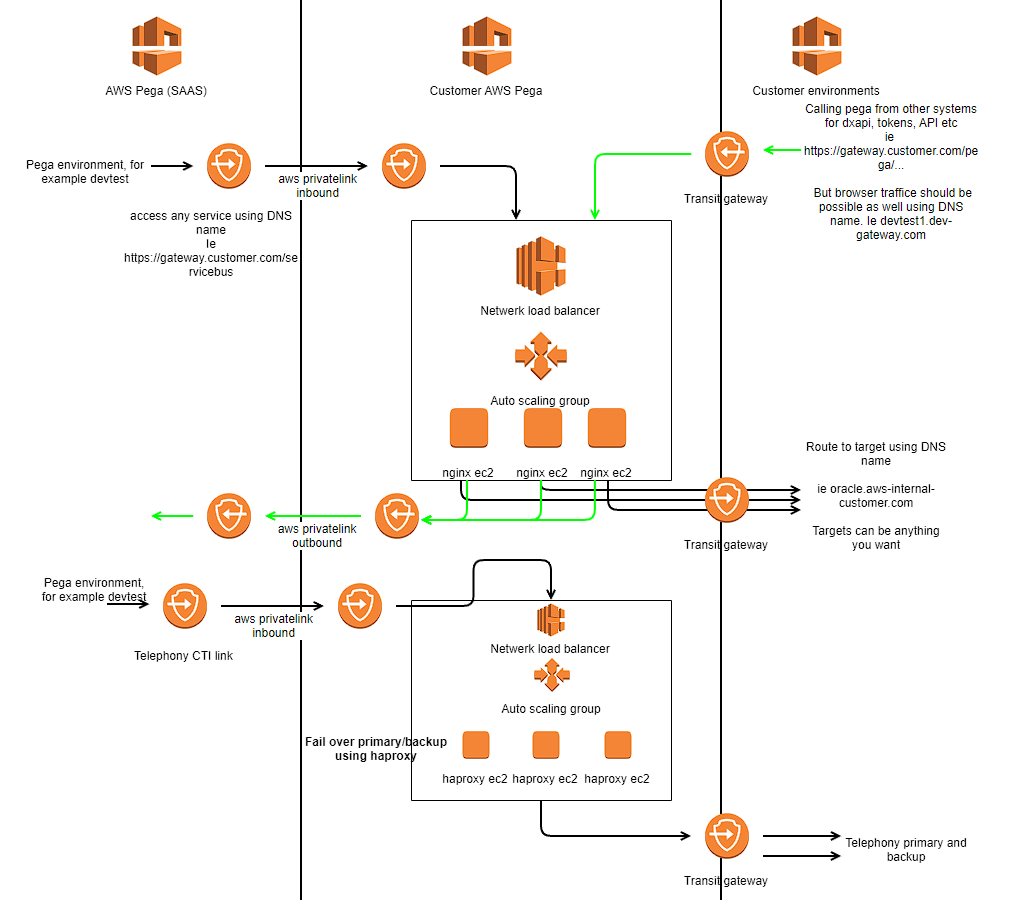

Since the solution worked like a charm, we decided to add an HAProxy instance on our EC2 servers to support TCP level proxying. The final reference architecture ended up like this.

For cost reasons we decided to use the same load balancer/ASG/EC2 pool. You could consider using a separate channel for CTI. The primary/backup servers for CTI are moved to the HAProxy. This you would normally set up in Pega, but due to port clashes between primary and backup servers HAProxy cannot route this properly. HAProxy is however more than capable of polling the back end systems and switching between primary and backup.
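HAProxy handles the polling and switching itself through its health checks. Purely to illustrate the idea, the following Python sketch probes the backends in priority order and picks the first one that accepts a TCP connection. The function names, hostnames and port are hypothetical.

```python
import socket
from typing import Callable, Optional, Sequence, Tuple

Backend = Tuple[str, int]  # (host, port)


def tcp_probe(backend: Backend, timeout: float = 1.0) -> bool:
    """Return True when a TCP connection to the backend can be opened."""
    try:
        with socket.create_connection(backend, timeout=timeout):
            return True
    except OSError:
        return False


def pick_backend(backends: Sequence[Backend],
                 probe: Callable[[Backend], bool] = tcp_probe
                 ) -> Optional[Backend]:
    """Return the first healthy backend in priority order (primary first)."""
    for backend in backends:
        if probe(backend):
            return backend
    return None


# Hypothetical CTI servers, primary listed first.
CTI_BACKENDS = [
    ("cti-primary.example.internal", 5000),
    ("cti-backup.example.internal", 5000),
]
```

Calling `pick_backend(CTI_BACKENDS)` returns the primary while it is reachable, the backup when it is not, and `None` when both are down.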

Conclusion

The reference architecture as described above for linking the Pega SAAS environment is a possible solution to replace or set up links from Pega SAAS to customer systems. The architecture uses proven technologies and is expected to function as a reliable and safe proxy. It can be a solid basis for your own designs.

Improvement points on the architecture are obvious.

- If you need to use HAProxy for phone traffic then why not for the rest of the traffic?

- How are you going to do maintenance? Logging in using ssh sessions to replace certificates or edit an nginx.conf in vi is not within the skillset of most Pega staff. So setting up a small maintenance portal could be a good option.

- From earlier experiments we have found that API gateway is not the best option for these types of solutions. But why not use AWS Fargate? That would lower maintenance even further.

And maybe more? Please let me know if you have feedback or follow-up questions I can address in my blog. You can reach me at bgarssen@quopt.com. I would love to hear from you!